Case Study

Company

Qualifi

Date

Responsibilities

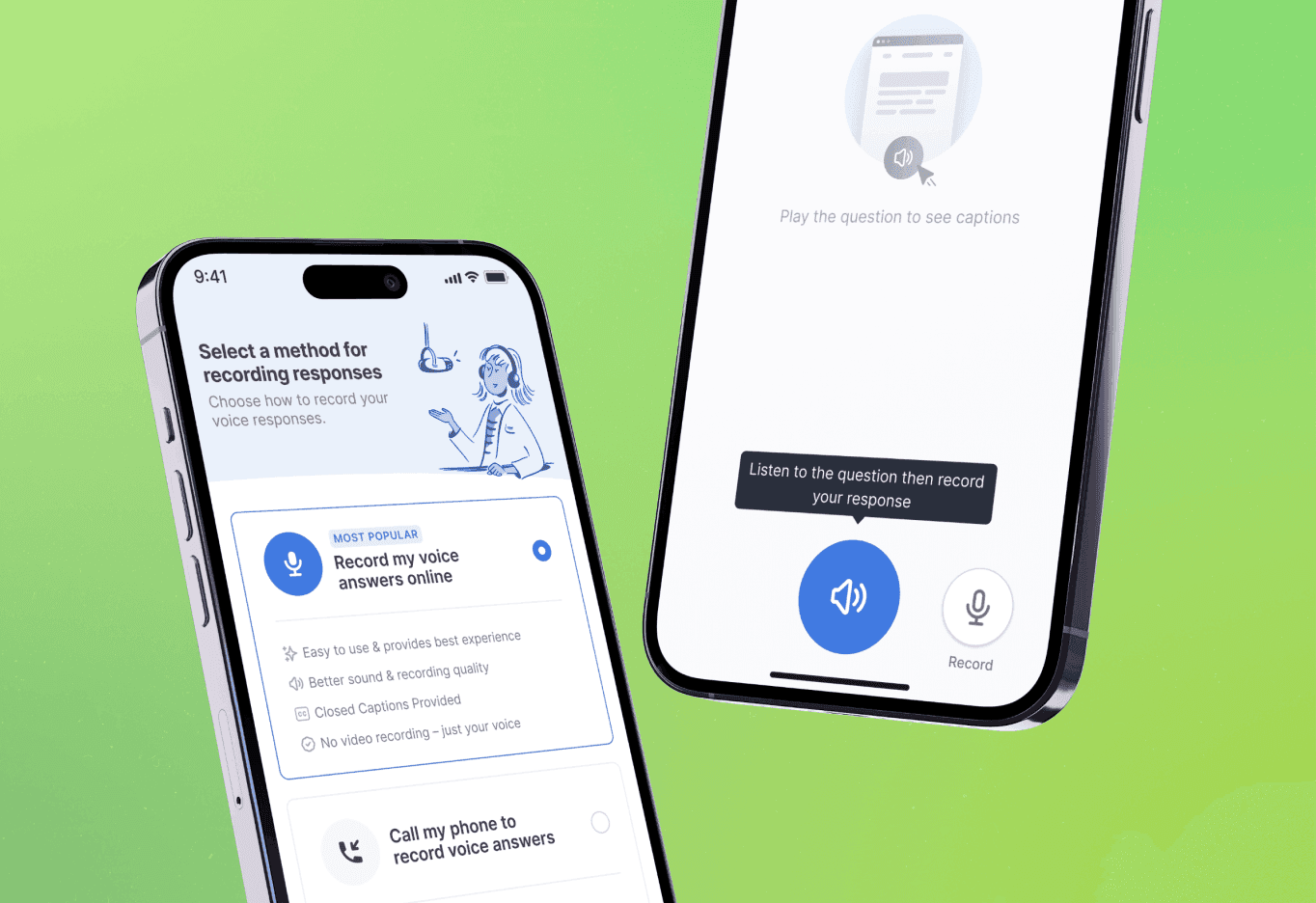

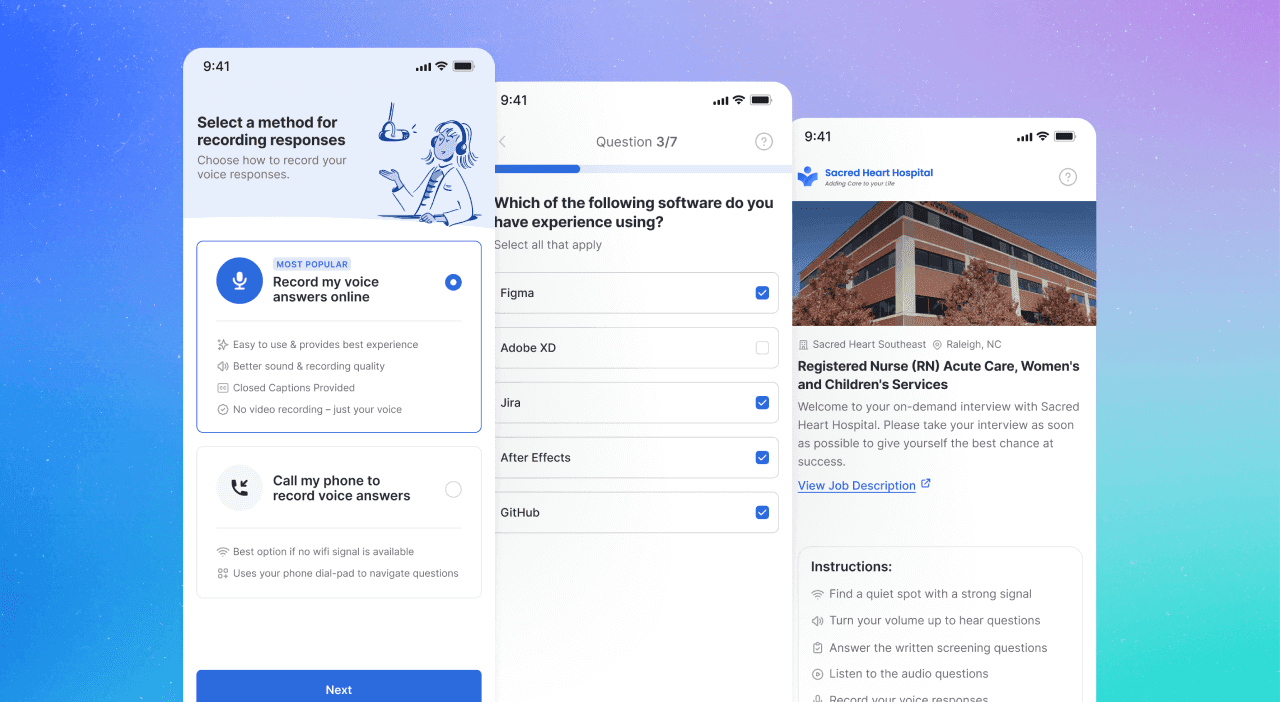

User research, usability testing, prototyping, product analytics, UI design

Team

DeSean Prentice, ENG Lead

Keenan Jaenicke, Product Manager

Michael Crenshaw, Senior Engineer

Emma Zachocki, Customer Success Manager

Cameron Campbell, Sr. Product Designer

Project Overview

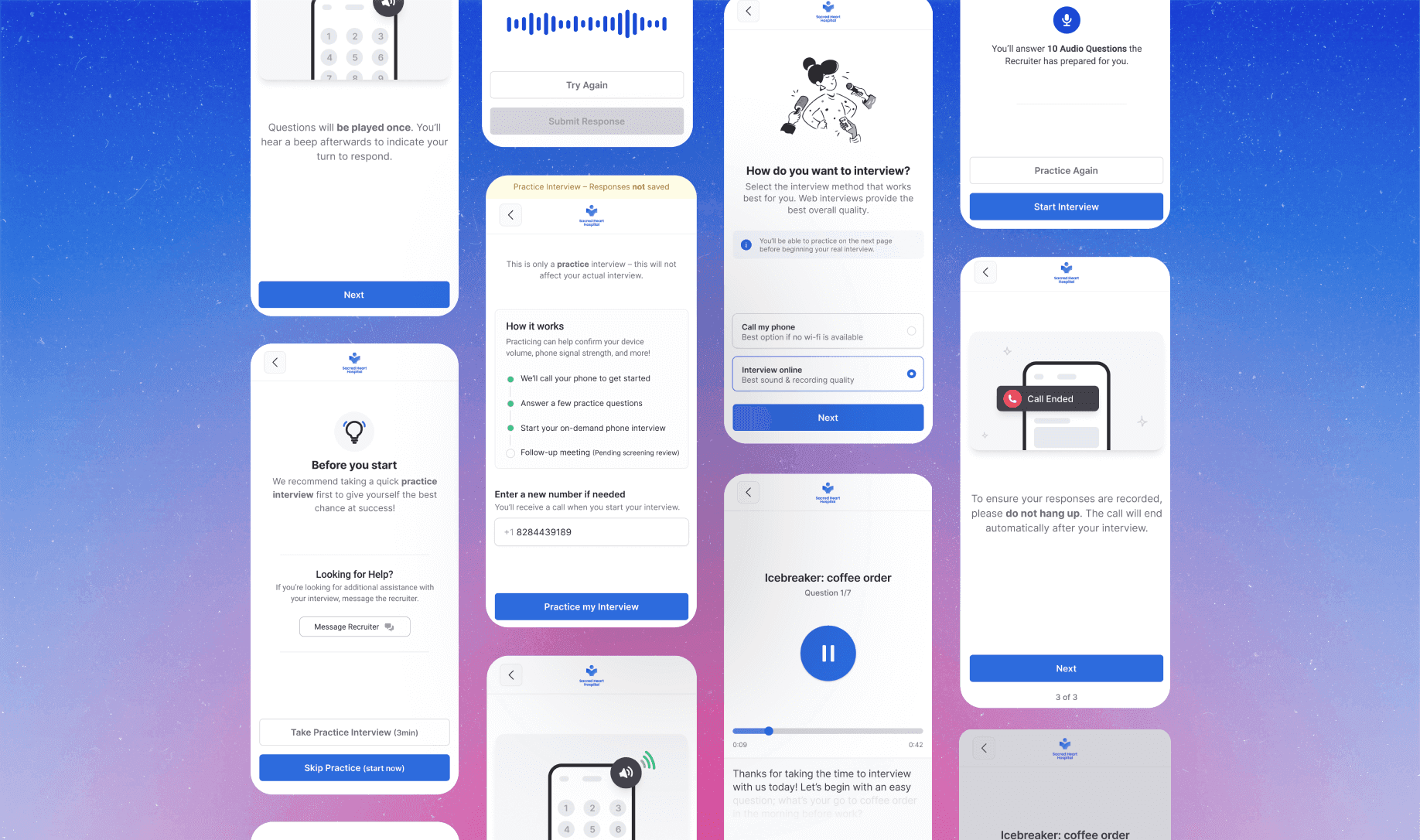

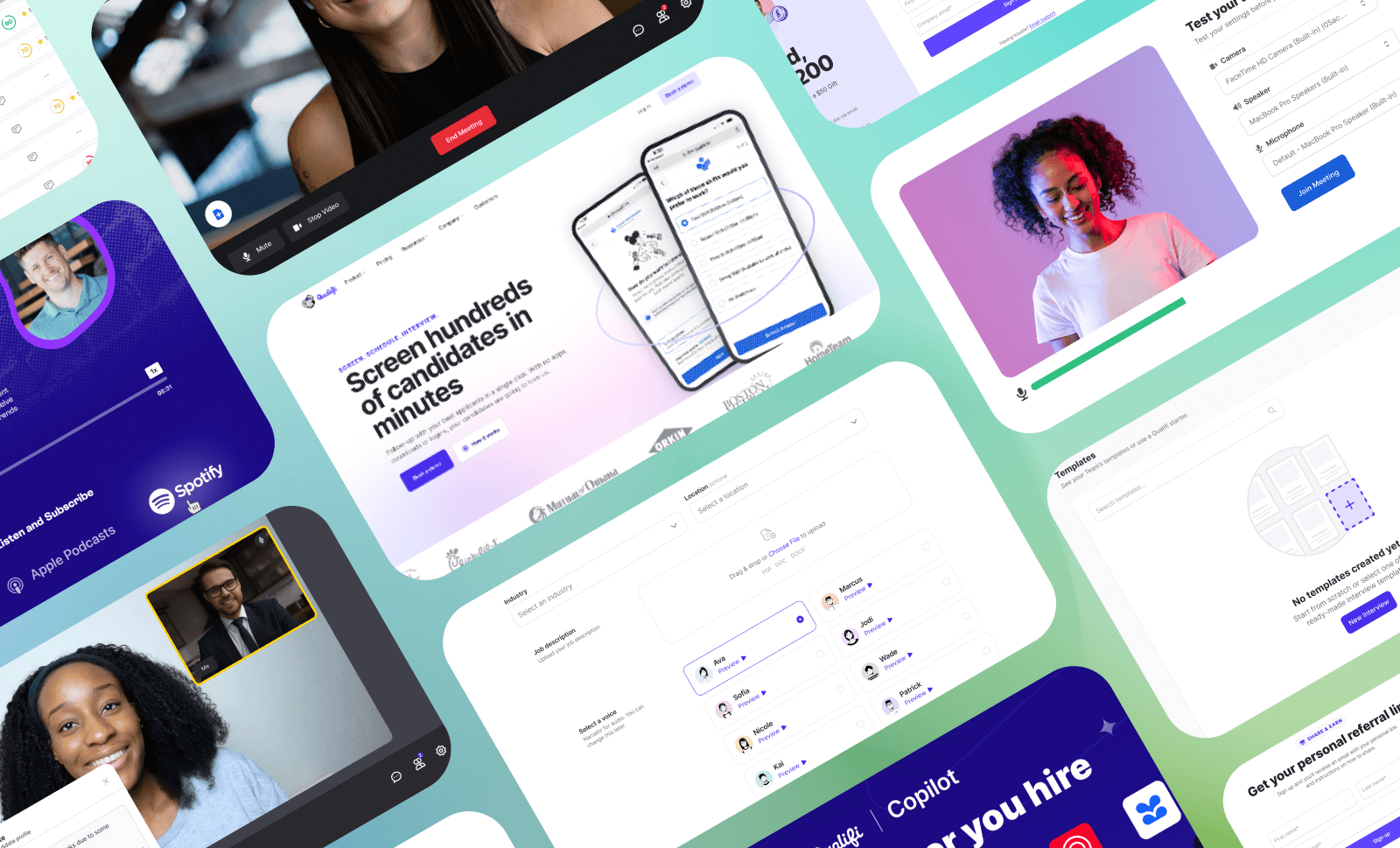

Qualifi is a high-volume recruiting platform for industries such as healthcare, retail, and manufacturing. HR teams at orgs like Mutual of Omaha and Orkin Pest Control use it to reduce time-to-hire and do more with less.

Dive into how we reimagined high-volume recruiting, boosting candidate feedback scores from 81% to 93% and increasing conversion rates by 8% across 100,000+ interviews 👇

The Challenge

High-volume recruiting is a game of speed. Think Sonic the Hedgehog, but HR. Sounds fun, eh? Qualifi and I faced a critical challenge: how to streamline the hiring process while reducing bias and allowing teams to maintain a high level of personal touch with a large pool of candidates.

In my role as Senior Product Designer, I spearheaded the design of an innovative audio-only screening solution that not only addressed these issues but also propelled Qualifi to secure $4.5 million in seed funding.

The Process

My design process focused on three key user groups:

Recruiters and TA Teams: Needing tools to streamline their workflow and reduce time-to-hire.

Hiring Managers: Not in the app often, but they need to review candidates.

Candidates: Desiring a fair, accessible, and user-friendly application process.

To fully grasp the complexities of high-volume recruiting, I needed to implement a multi-faceted research approach:

User Interviews

I conducted bi-weekly user interviews (as well as surveys and unmoderated tests) with recruiters to uncover pain points, needs & desires.

Data Analysis

I leveraged analytics from tools like Datadog and Sentry to identify usage patterns and inform product decisions.

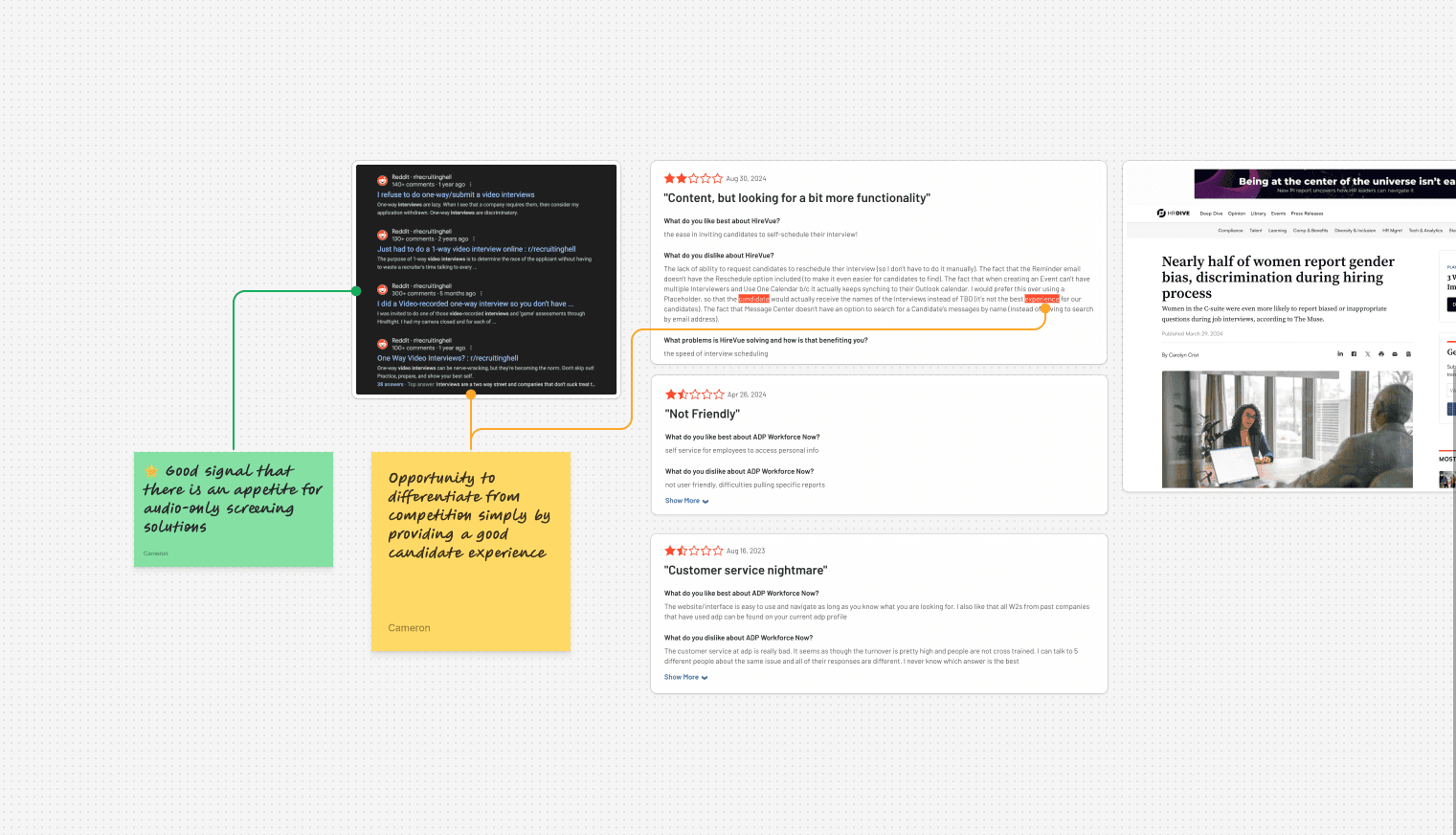

Competitive Analysis

I researched & documented existing solutions to identify gaps and opportunities. Not merely feature parity, either. We were looking for places others had dropped the ball, so we could pick it up.

Competitor reviews on sites like G2 & Capterra

Competitor website/YouTube/Help Forums

Candidate feedback

I gathered direct and indirect feedback from job candidates.

r/recruitinghell on Reddit

Our own internal data collected from 150k+ interviews

This includes direct feedback candidates can optionally leave for Qualifi

Secondary feedback from recruiters (what they hear from candidates about the process)

News articles like HR Dive

The Research

The research revealed a critical insight: we needed a flexible system that could align candidate needs with recruiters' goals. How could we make it seamless for candidates to interview (and give them the best chance of success) while also meeting the needs of hiring teams, who need to save time at every step of the hiring process?

Potential buyers will run away from a pain twice as fast as they run towards a gain.

The Results

By acting on these insights and implementing targeted strategies, we achieved significant improvements in the recruitment process. Key results for this project were:

Increased candidate feedback score from 81% to 93%

Boosted overall candidate conversion by 8% across more than 50 organizations and 100,000 interviews

Decreased candidate support tickets by 11%

What I Learned

While working on this project, I learned some important lessons that changed how I design and build products. Here are the main ones:

Data-driven design decisions > “going with your gut”

I used to hear things like “x user type doesn't use y feature,” but once I started bringing real data into these conversations, I was able to make better-informed decisions.

Regular user testing and iteration are key for successful products

One of my favorite quotes about user testing comes from Eric Ries (Lean Startup): “Metrics are people, too.” While I've found comfort in turning to data for UX answers & insights, this project was a good example of the power in just talking with users, and a reminder that attitudinal != behavioral (users may say one thing but do another).